FICO Explainable ML: HELOC

Introduction

In this walkthrough, we'll use Xplainable's platform to analyze the FICO Home Equity Line of Credit (HELOC) dataset. This dataset is commonly used to predict credit risk, helping to identify borrowers who may be at risk of defaulting on their line of credit.

We'll go step-by-step through Xplainable's features, starting with data preprocessing, moving on to model training, and finally, interpreting the results. The goal is to show how Xplainable can simplify the process of building and understanding machine learning models, providing clear insights without the need for complex coding or deep statistical knowledge. By the end of this walkthrough, you'll see how Xplainable can help you quickly draw meaningful conclusions from the FICO HELOC dataset.

Install and import relevant packages

!pip install altair==5.4.1 #Upgrade this to work in Google Colab

!pip install xplainable-client

!pip install xplainable==1.2.3

!pip install kaggle

import pandas as pd

from sklearn.model_selection import train_test_split

import json

import requests

import os

import xplainable_client

import xplainable as xp

from xplainable.core import XClassifier

from xplainable.core.optimisation.bayesian import XParamOptimiser

1. Import CSV and Perform Data Processing

data = pd.read_csv('https://xplainable-public-storage.syd1.digitaloceanspaces.com/example_data/heloc_dataset.csv') # Load the HELOC dataset from public storage

data.head()

| | RiskPerformance | ExternalRiskEstimate | MSinceOldestTradeOpen | MSinceMostRecentTradeOpen | AverageMInFile | NumSatisfactoryTrades | NumTrades60Ever2DerogPubRec | NumTrades90Ever2DerogPubRec | PercentTradesNeverDelq | MSinceMostRecentDelq | ... | PercentInstallTrades | MSinceMostRecentInqexcl7days | NumInqLast6M | NumInqLast6Mexcl7days | NetFractionRevolvingBurden | NetFractionInstallBurden | NumRevolvingTradesWBalance | NumInstallTradesWBalance | NumBank2NatlTradesWHighUtilization | PercentTradesWBalance |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | Bad | 55 | 144 | 4 | 84 | 20 | 3 | 0 | 83 | 2 | ... | 43 | 0 | 0 | 0 | 33 | -8 | 8 | 1 | 1 | 69 |
| 1 | Bad | 61 | 58 | 15 | 41 | 2 | 4 | 4 | 100 | -7 | ... | 67 | 0 | 0 | 0 | 0 | -8 | 0 | -8 | -8 | 0 |
| 2 | Bad | 67 | 66 | 5 | 24 | 9 | 0 | 0 | 100 | -7 | ... | 44 | 0 | 4 | 4 | 53 | 66 | 4 | 2 | 1 | 86 |
| 3 | Bad | 66 | 169 | 1 | 73 | 28 | 1 | 1 | 93 | 76 | ... | 57 | 0 | 5 | 4 | 72 | 83 | 6 | 4 | 3 | 91 |
| 4 | Bad | 81 | 333 | 27 | 132 | 12 | 0 | 0 | 100 | -7 | ... | 25 | 0 | 1 | 1 | 51 | 89 | 3 | 1 | 0 | 80 |

The definition of each field is given below:

| Variable Names | Description |
|---|---|
| RiskPerformance | Paid as negotiated flag (12-36 Months). String of Good and Bad |
| ExternalRiskEstimate | Consolidated version of risk markers |
| MSinceOldestTradeOpen | Months Since Oldest Trade Open |
| MSinceMostRecentTradeOpen | Months Since Most Recent Trade Open |
| AverageMInFile | Average Months in File |
| NumSatisfactoryTrades | Number of Satisfactory Trades |
| NumTrades60Ever2DerogPubRec | Number of Trades 60+ Ever |
| NumTrades90Ever2DerogPubRec | Number of Trades 90+ Ever |
| PercentTradesNeverDelq | Percent of Trades Never Delinquent |
| MSinceMostRecentDelq | Months Since Most Recent Delinquency |
| MaxDelq2PublicRecLast12M | Max Delinquency/Public Records in the Last 12 Months. See tab 'MaxDelq' for each category |
| MaxDelqEver | Max Delinquency Ever. See tab 'MaxDelq' for each category |
| NumTotalTrades | Number of Total Trades (total number of credit accounts) |
| NumTradesOpeninLast12M | Number of Trades Open in Last 12 Months |
| PercentInstallTrades | Percent of Installment Trades |
| MSinceMostRecentInqexcl7days | Months Since Most Recent Inquiry excluding the last 7 days |
| NumInqLast6M | Number of Inquiries in the Last 6 Months |
| NumInqLast6Mexcl7days | Number of Inquiries in the Last 6 Months excluding the last 7 days. Excluding the last 7 days removes inquiries that are likely due to price comparison shopping. |
| NetFractionRevolvingBurden | This is the revolving balance divided by the credit limit |
| NetFractionInstallBurden | This is the installment balance divided by the original loan amount |
| NumRevolvingTradesWBalance | Number of Revolving Trades with Balance |
| NumInstallTradesWBalance | Number of Installment Trades with Balance |
| NumBank2NatlTradesWHighUtilization | Number of Bank/National Trades with high utilization ratio |
| PercentTradesWBalance | Percent of Trades with Balance |
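The preview rows contain negative values such as -7 and -8 in fields that describe months, counts, or percentages. In the FICO HELOC data, negative entries like these are generally documented as special codes (for example, a condition that was not met) rather than real measurements, so it can be worth counting them before modelling. The sketch below does this in plain Python on three columns copied from the five preview rows; the helper name `count_special_codes` is illustrative and not part of Xplainable.

```python
# Three columns copied from the five preview rows shown earlier.
preview = {
    "MSinceMostRecentDelq": [2, -7, -7, 76, -7],
    "NetFractionInstallBurden": [-8, -8, 66, 83, 89],
    "NumInstallTradesWBalance": [1, -8, 2, 4, 1],
}


def count_special_codes(columns):
    """Return {column: number of negative entries} for a dict of numeric lists."""
    return {
        name: sum(1 for value in values if value < 0)
        for name, values in columns.items()
    }


special_counts = count_special_codes(preview)
for name, n_negative in special_counts.items():
    print(f"{name}: {n_negative} of {len(preview[name])} preview rows are negative")

# On the full DataFrame, an equivalent check would be:
# (data.drop(columns="RiskPerformance") < 0).sum()
```

How these codes should be treated (left as-is, binned separately, or replaced) is a modelling decision that this walkthrough does not prescribe.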

Separate data into target (y) and features (x)

y = data['RiskPerformance']

x = data.drop('RiskPerformance',axis=1)

Create test and train datasets

x_train, x_test, y_train, y_test = train_test_split(x,y, test_size=0.2, random_state=42, stratify=y)

2. Model Optimisation

Xplainable's XParamOptimiser tunes the hyperparameters of our model. With the settings below, it runs up to 300 trials with 2-fold cross-validation on the training data and returns the parameters that achieved the best F1 score.

y_train_df = pd.Series(y_train)

optimiser = XParamOptimiser(metric='f1-score',n_trials=300, n_folds=2, early_stopping=150)

params = optimiser.optimise(x_train, y_train_df)
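Since the optimiser is asked to maximise `f1-score`, it helps to recall what that metric measures. The sketch below computes F1 by hand for a handful of hypothetical labels, treating 'Bad' as the positive class. Which class XParamOptimiser treats as positive is an assumption here, and this is not the optimiser's internal code.

```python
def f1_score_binary(y_true, y_pred, positive="Bad"):
    """F1 = harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical labels, for illustration only.
y_true_demo = ["Bad", "Bad", "Good", "Good", "Bad", "Good"]
y_pred_demo = ["Bad", "Good", "Good", "Bad", "Bad", "Good"]

f1_demo = f1_score_binary(y_true_demo, y_pred_demo)
print(round(f1_demo, 3))
```

Here two of the three actual 'Bad' cases are caught and two of the three 'Bad' predictions are right, so precision and recall are both 2/3 and so is F1.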

3. Model Training

The XClassifier is then trained on the training set using the optimised parameters.

model = XClassifier(**params)

model.fit(x_train, y_train)
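The held-out test set has not been used up to this point. A minimal sketch of scoring a fitted model on it follows. It assumes the model exposes a `predict` method that returns labels in the same encoding as the true labels, and it uses a hypothetical stand-in class so the block runs without the trained XClassifier.

```python
def accuracy(model, x, y):
    """Share of rows where model.predict(x) matches the true label."""
    predictions = list(model.predict(x))
    truths = list(y)
    correct = sum(1 for p, t in zip(predictions, truths) if p == t)
    return correct / len(truths)


class ThresholdModel:
    """Hypothetical stand-in: predicts 'Bad' when ExternalRiskEstimate < 70."""

    def predict(self, rows):
        return ["Bad" if row["ExternalRiskEstimate"] < 70 else "Good" for row in rows]


# Hypothetical held-out rows and labels, for illustration only.
x_demo = [{"ExternalRiskEstimate": value} for value in (55, 61, 81, 90)]
y_demo = ["Bad", "Bad", "Bad", "Good"]

demo_accuracy = accuracy(ThresholdModel(), x_demo, y_demo)
print(demo_accuracy)

# With the walkthrough's objects, the call would look like:
# accuracy(model, x_test, y_test)
```

Accuracy alone can hide poor performance on the minority class, so it is usually read alongside a class-specific metric such as the F1 score used during optimisation.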

4. Explaining and Interpreting the Model

Following training, the model.explain() method is called to generate insights into the model's decision-making process. This step is crucial for understanding the factors that influence the model's predictions and ensuring that the model's behaviour is transparent and explainable.

model.explain()

Analysing Feature Importances and Contributions

Click on the bars to see the importances and contributions of each variable.

Feature Importances

The relative significance of each feature (or input variable) in making predictions. It indicates how much each feature contributes to the model’s predictions, with higher values implying greater influence.

Feature Contributions

The effect of each feature on individual predictions. For instance, in this model, feature contributions show how each feature (such as NetFractionRevolvingBurden, the revolving balance divided by the credit limit) affects the predicted risk for a particular applicant.
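To make the idea of per-prediction contributions concrete, here is a toy sketch that assumes an additive scheme: a base value plus one contribution per feature gives the applicant's score. Every number below is hypothetical and chosen only to show the arithmetic; none of it is read from the trained model.

```python
# All values are hypothetical, for illustration only.
base_value = 0.48  # assumed starting score before any feature is considered
contributions = {
    "ExternalRiskEstimate": -0.12,
    "NetFractionRevolvingBurden": 0.09,
    "MSinceMostRecentInqexcl7days": 0.03,
}


def additive_score(base, contribs):
    """Sum the base value and every per-feature contribution."""
    return base + sum(contribs.values())


applicant_score = additive_score(base_value, contributions)

# Rank features by the size of their effect on this one prediction.
ranked = sorted(contributions, key=lambda name: abs(contributions[name]), reverse=True)

print(round(applicant_score, 2))
print(ranked)
```

Ranking by absolute contribution shows which features moved this particular score the most, which is a different question from overall feature importance across the whole dataset.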

5. Saving a model to the Xplainable App

In this step, we save the trained HELOC risk model, which predicts the likelihood of applicants defaulting on their line of credit, to the Xplainable platform using client.create_model. We pass in the model, a name and description, and our training data. The call returns identifiers for the saved model, including a version_id that represents this particular iteration of the model and is used in the deployment step, allowing us to track and manage different versions systematically.

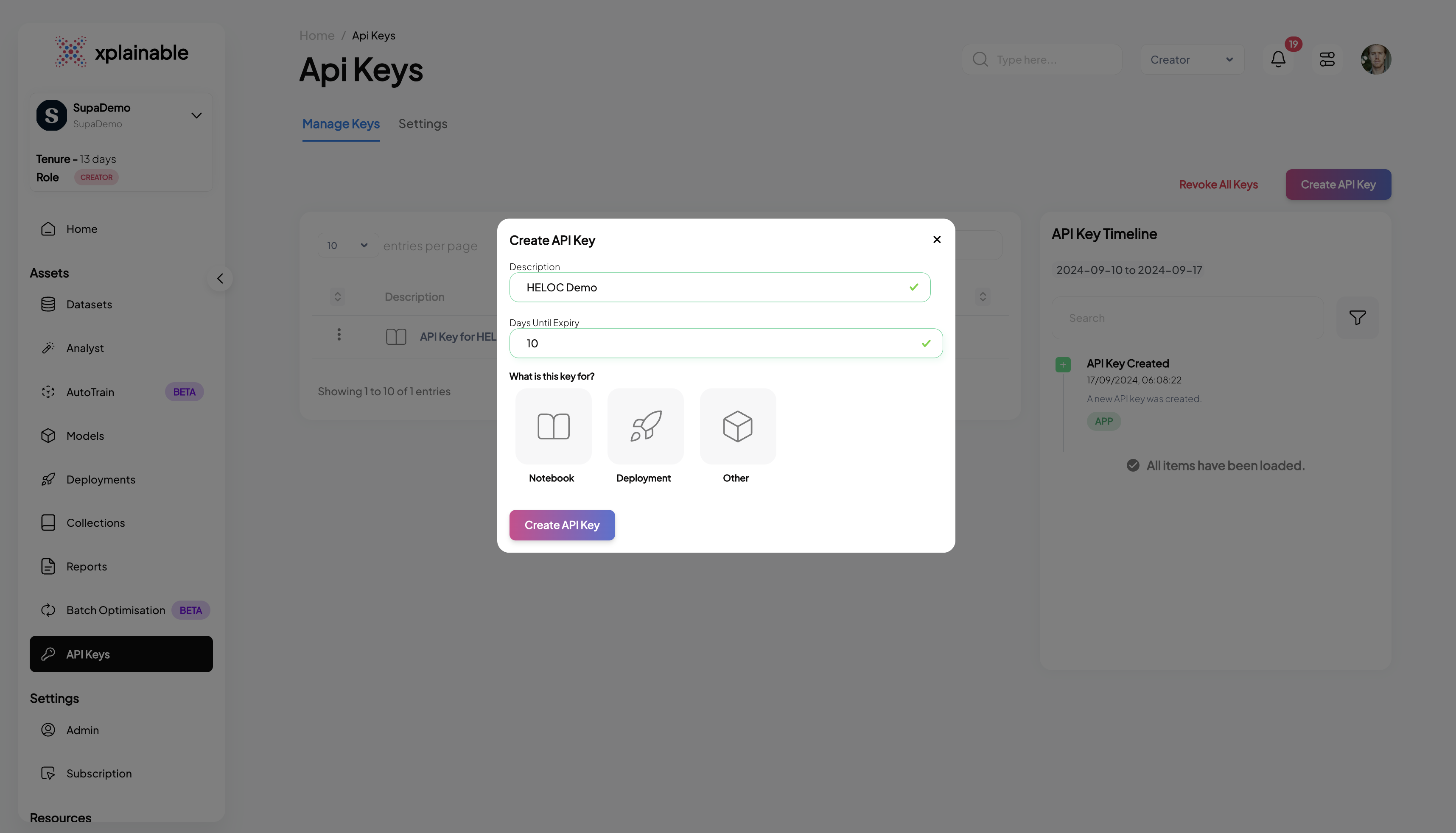

Create an API key in the Xplainable platform

client = xplainable_client.Client(
    api_key="",  # Insert API key here
)
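Hard-coding a key in a notebook makes it easy to leak when the notebook is shared. One common alternative, sketched below, is to read the key from an environment variable. The variable name `XPLAINABLE_API_KEY` and the helper `load_api_key` are hypothetical choices, not something the client looks for on its own.

```python
import os


def load_api_key(var_name="XPLAINABLE_API_KEY", environ=os.environ):
    """Return the key stored under var_name, or raise if it is missing or blank."""
    key = environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable before creating the client."
        )
    return key


# Usage with the walkthrough's client (commented out so the sketch runs without a key):
# client = xplainable_client.Client(api_key=load_api_key())
```

The `environ` parameter exists only so the helper can be exercised with a plain dictionary instead of the real process environment.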

# Create a model
model_id = client.create_model(
    model=model,
    model_name="HELOC Model",
    model_description="Predicting applicant risk estimates",
    x=x_train,
    y=y_train
)

Xplainable App Model View

As you can see in the screenshot, the model has now been saved to Xplainable's web app, allowing you and other members of your organisation to analyse it visually.

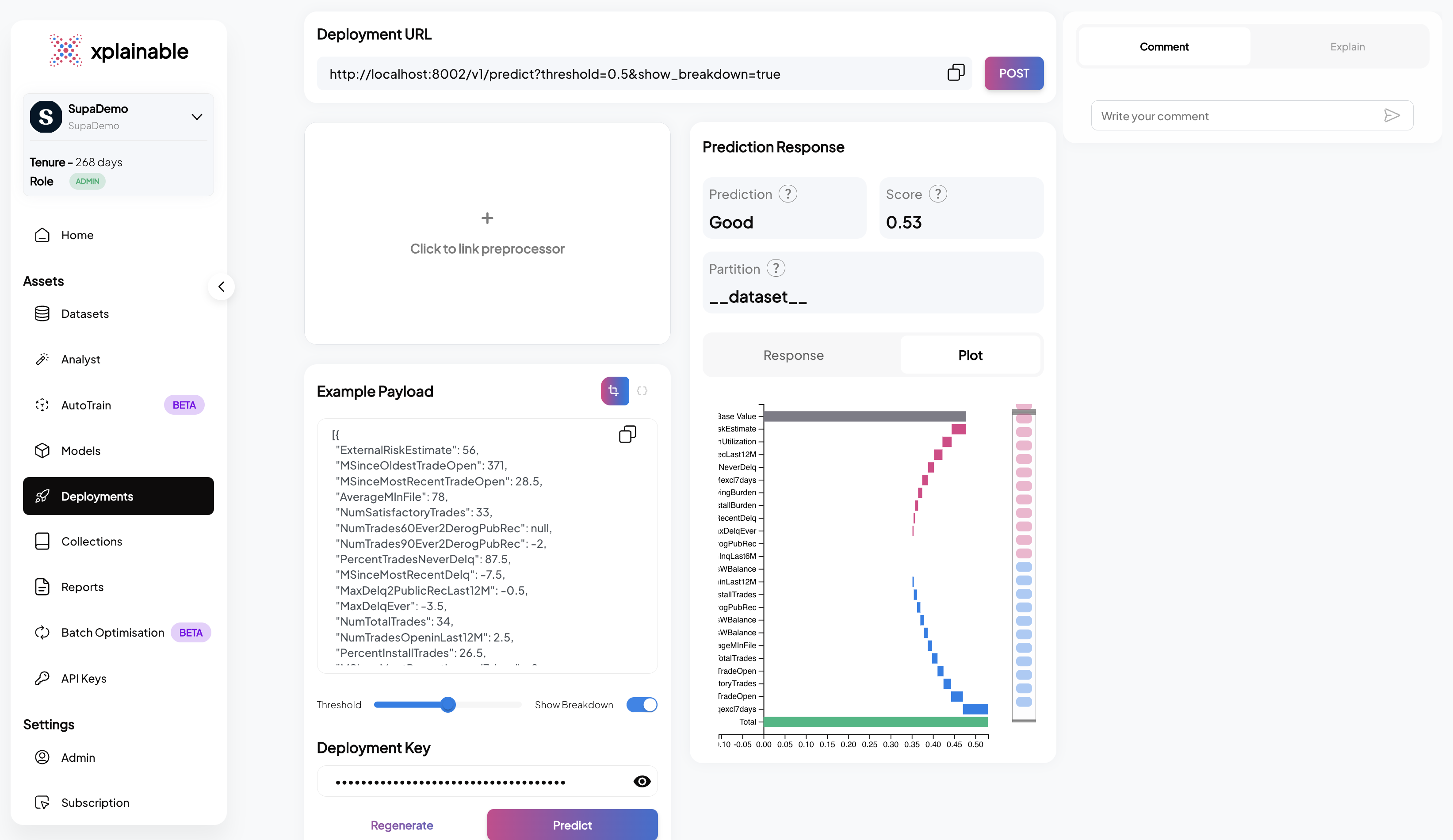

6. Deployments

The code block illustrates the deployment of our HELOC risk model using the client.deploy function. The deployment process takes the version_id that we obtained when saving the model in the previous step. This step creates the model's endpoint so that, once activated, it can receive and process prediction requests. The output confirms the deployment with a deployment_id, indicating the model's current status as 'inactive', its location, and the endpoint URL where it can be accessed for Xplainable deployments.

model_id

deployment = client.deploy(
    model_version_id=model_id["version_id"]
)

deployment

Testing the Deployment programmatically

This section demonstrates the steps taken to programmatically test a deployed model. These steps are essential for validating that the model's deployment is functional and ready to process incoming prediction requests.

- Activating the Deployment: The model deployment is activated using client.activate_deployment, which changes the deployment status to active, allowing it to accept prediction requests.

client.activate_deployment(deployment['deployment_id'])

- Creating a Deployment Key: A deployment key is generated with client.generate_deploy_key. This key is required to authenticate and make secure requests to the deployed model.

deploy_key = client.generate_deploy_key(deployment['deployment_id'],'HELOC Deploy Key', 7)

- Generating Example Payload: An example payload for a deployment request is generated by client.generate_example_deployment_payload. This payload mimics the input data structure the model expects when making predictions.

# Set the option to highlight multiple ways of creating data
option = 2

if option == 1:
    body = client.generate_example_deployment_payload(deployment['deployment_id'])
else:
    body = json.loads(data.drop(columns=["RiskPerformance"]).sample(1).to_json(orient="records"))

body

- Making a Prediction Request: A POST request is made to the model's prediction endpoint with the example payload. The model processes the input data and returns a prediction response, which includes the predicted class (for this dataset, 'Good' or 'Bad') and the prediction probabilities for each class.

response = requests.post(
    url="https://inference.xplainable.io/v1/predict",
    headers={'api_key': deploy_key['deploy_key']},
    json=body
)

value = response.json()

value
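A request to a hosted endpoint can fail for reasons unrelated to the model, such as an expired deploy key or a network timeout. The sketch below wraps the call so that failures surface as exceptions instead of an unexpected JSON body. It relies only on the `timeout` argument and `raise_for_status()`, both standard parts of the requests library. The helper name `post_prediction`, the `post` parameter, and the stand-in response are illustrative, and the `{"ok": True}` body is a placeholder rather than the real response schema.

```python
def post_prediction(post, url, deploy_key, body, timeout=10):
    """POST a JSON payload and return the decoded response, raising on HTTP errors.

    `post` is any callable with the same interface as requests.post.
    """
    response = post(url, headers={"api_key": deploy_key}, json=body, timeout=timeout)
    response.raise_for_status()  # with requests, raises for 4xx/5xx responses
    return response.json()


class FakeResponse:
    """Stand-in for a successful response so the sketch runs offline."""

    def raise_for_status(self):
        return None

    def json(self):
        return {"ok": True}  # placeholder body, not the real schema


def fake_post(url, headers, json, timeout):
    return FakeResponse()


demo_value = post_prediction(
    fake_post, "https://example.invalid/predict", "demo-key", [{"x": 1}]
)
print(demo_value)

# With the walkthrough's objects, the call would look like:
# value = post_prediction(requests.post, "https://inference.xplainable.io/v1/predict",
#                         deploy_key["deploy_key"], body)
```

Passing the `post` callable in as an argument keeps the helper testable without network access; in the notebook it would simply be `requests.post`.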

SaaS Deployment Info

The SaaS application interface mirrors the operations performed programmatically in the earlier steps. It provides a dashboard for managing the HELOC model, facilitating a range of actions from deployment to testing, all within a user-friendly web interface. This makes it accessible even to non-technical users who prefer to manage model deployments and monitor performance through a graphical interface rather than code. Features like the deployment checklist, example payload, and prediction response are all integrated into the application, ensuring that users have full control and visibility over the deployment lifecycle and model interactions.